Seq2Seq (Work in progress)

The Long Short-Term Memory Network

from tensorflow.keras.layers import LSTM

LSTM(units, return_sequences=True, return_state=True)

- units: Positive integer, dimensionality of the output space.

- You can think of it roughly as you would a fully connected layer: units are neurons.

- The dimension of the output is the number of neurons, as with most well-known layer types.

- The difference is that in an LSTM these neurons are not completely independent of each other. They interact through the recurrent operations under the hood.

Credit: https://stackoverflow.com/questions/51932767/how-to-interpret-clearly-the-meaning-of-the-units-parameter-in-keras

- return_sequences: Boolean. Whether to return the last output in the output sequence, or the full sequence. Default: False.

- return_state: Boolean. Whether to return the last state in addition to the output. Default: False.
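To make the three arguments concrete, here is a minimal sketch that calls the layer on a random batch and inspects the shapes. The sizes (`batch`, `timesteps`, `features`, `units`) are arbitrary values chosen for illustration and are not from these notes.

```python
import tensorflow as tf
from tensorflow.keras.layers import LSTM

# Illustrative sizes only (assumptions, not from the notes).
batch, timesteps, features, units = 2, 5, 3, 4
x = tf.random.normal((batch, timesteps, features))

# Defaults: only the output of the last time step, shape (batch, units).
last_output = LSTM(units)(x)

# return_sequences=True: one output per time step, shape (batch, timesteps, units).
full_sequence = LSTM(units, return_sequences=True)(x)

# return_state=True additionally returns the final hidden state and cell state,
# each of shape (batch, units).
seq, state_h, state_c = LSTM(units, return_sequences=True, return_state=True)(x)

print(last_output.shape)
print(full_sequence.shape)
print(seq.shape, state_h.shape, state_c.shape)
```

Note that `state_h` is the same tensor as the last time step of `seq`. The cell state `state_c` is only available through `return_state=True`.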

Sequential Memory

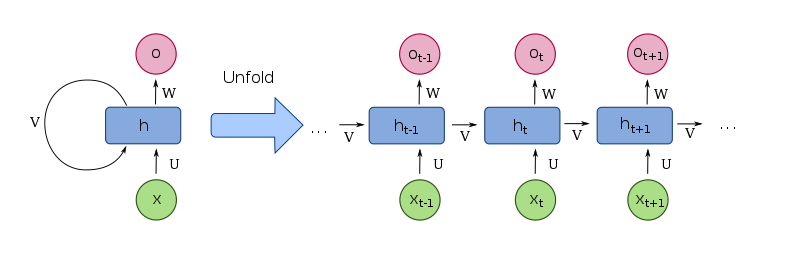

RNN Input Layers, Hidden Layers, and Output Layers

- RNNs have loops that let information persist from one time step to the next

- RNN Unfolded / Unrolled

- x: input vector

- h: hidden layer vector

- o: output vector

- U, V, W: weights of the network

- σ: activation functions

- https://www.greeksymbols.net/sigma-symbol

Credit: https://en.wikipedia.org/wiki/File:Recurrent_neural_network_unfold.svg
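The unrolled picture can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: U maps the input to the hidden layer, V maps the previous hidden state to the current one, W maps the hidden layer to the output, tanh stands in for σ on the hidden layer, the output is left linear, and biases are omitted. The function name `rnn_forward` and all sizes are hypothetical.

```python
import numpy as np

def rnn_forward(xs, U, V, W, h0):
    """Run a simple RNN over a sequence, reusing U, V, W at every step."""
    h = h0
    hidden_states, outputs = [], []
    for x in xs:                      # one iteration per unrolled time step
        h = np.tanh(U @ x + V @ h)    # new hidden state from input and previous state
        o = W @ h                     # output read from the hidden state (linear here)
        hidden_states.append(h)
        outputs.append(o)
    return np.stack(hidden_states), np.stack(outputs)

# Illustrative sizes (assumptions): 5 time steps, 3 inputs, 4 hidden units, 2 outputs.
rng = np.random.default_rng(0)
xs = rng.normal(size=(5, 3))
U = rng.normal(scale=0.1, size=(4, 3))
V = rng.normal(scale=0.1, size=(4, 4))
W = rng.normal(scale=0.1, size=(2, 4))

hs, outs = rnn_forward(xs, U, V, W, h0=np.zeros(4))
print(hs.shape, outs.shape)
```

The loop is the "loop" in the diagram: the same three weight matrices are applied at every step, and only `h` carries information forward.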

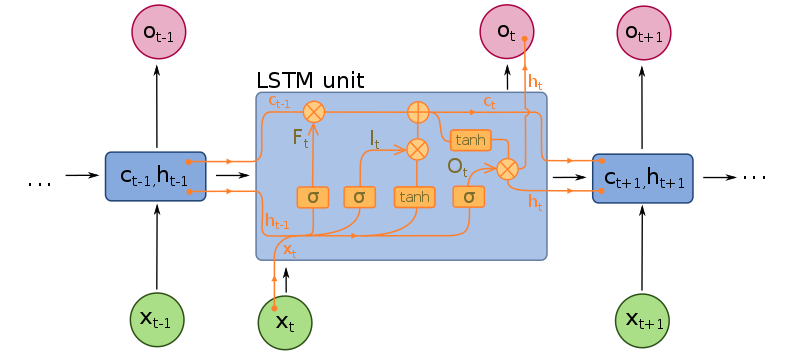

- LSTM unit

Credit: https://en.wikipedia.org/wiki/File:Long_Short-Term_Memory.svg

- LSTMs were designed to keep backpropagated errors from vanishing over long sequences. Exploding gradients can still occur and are usually handled with gradient clipping

- A common LSTM unit is composed of a cell, an input gate, an output gate and a forget gate. The cell remembers values over arbitrary time intervals and the three gates regulate the flow of information into and out of the cell. (Wikipedia: https://en.wikipedia.org/wiki/Long_short-term_memory)

- Gates learn which information to keep or discard

- forget gates

- input gates

- output gates
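How the three gates regulate the cell can be sketched as a single time step in NumPy. This follows the commonly used LSTM formulation and is not the Keras implementation. The helper `lstm_step`, the `params` dictionary layout, and all sizes are assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """One time step of a standard LSTM cell (illustrative sketch)."""
    z = np.concatenate([h_prev, x])                       # gates see previous hidden state and current input
    f = sigmoid(params["W_f"] @ z + params["b_f"])        # forget gate: how much of the old cell state to keep
    i = sigmoid(params["W_i"] @ z + params["b_i"])        # input gate: how much new information to write
    o = sigmoid(params["W_o"] @ z + params["b_o"])        # output gate: how much of the cell to expose
    c_tilde = np.tanh(params["W_c"] @ z + params["b_c"])  # candidate values to write into the cell
    c = f * c_prev + i * c_tilde                          # updated cell state
    h = o * np.tanh(c)                                    # updated hidden state (the unit's output)
    return h, c

# Illustrative sizes (assumptions): 3 input features, 4 units, 5 time steps.
rng = np.random.default_rng(0)
input_dim, units = 3, 4
params = {}
for gate in "fioc":
    params[f"W_{gate}"] = rng.normal(scale=0.1, size=(units, units + input_dim))
    params[f"b_{gate}"] = np.zeros(units)

h, c = np.zeros(units), np.zeros(units)
for x in rng.normal(size=(5, input_dim)):
    h, c = lstm_step(x, h, c, params)
print(h.shape, c.shape)
```

When the forget gate is close to 1 and the input gate is close to 0, the cell state passes through a step almost unchanged, which is how the cell can remember values over long intervals.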